Designing Explainable AI Beyond Predictions

As AI systems increasingly inform real decisions, understanding why a model behaves the way it does becomes as important as the prediction itself. Teams need explanations for many reasons: regulatory review, internal validation, stakeholder communication, and long-term trust in automated systems.

Explainable AI (XAI) addresses this need by making model behavior understandable — not just to ML practitioners, but to the broader group of people responsible for using, approving, and explaining AI-driven outcomes.

This project explores XAI as a designed experience, not just a technical artifact.

One System, Multiple Perspectives

In real organizations, no single dashboard serves everyone. ML practitioners, product teams, domain experts, and business stakeholders all interact with models differently, and each group needs a different kind of explanation.

This demo represents one perspective within a larger XAI system: a dashboard focused on understanding patterns, cohorts, and reasoning, rather than training or tuning models. Other dashboards — such as those for model debugging or performance optimization — would surface different details for different audiences.

The Models Behind the Demo

The demo uses two complementary models to illustrate how explainability changes with complexity:

Decision Tree Classifier

A decision tree classifier predicts outcomes by following a series of human-readable rules, branching on feature values to form a clear path to a decision. In this demo, it is visualized directly in the interface, providing a transparent baseline for understanding how a model structures its reasoning.

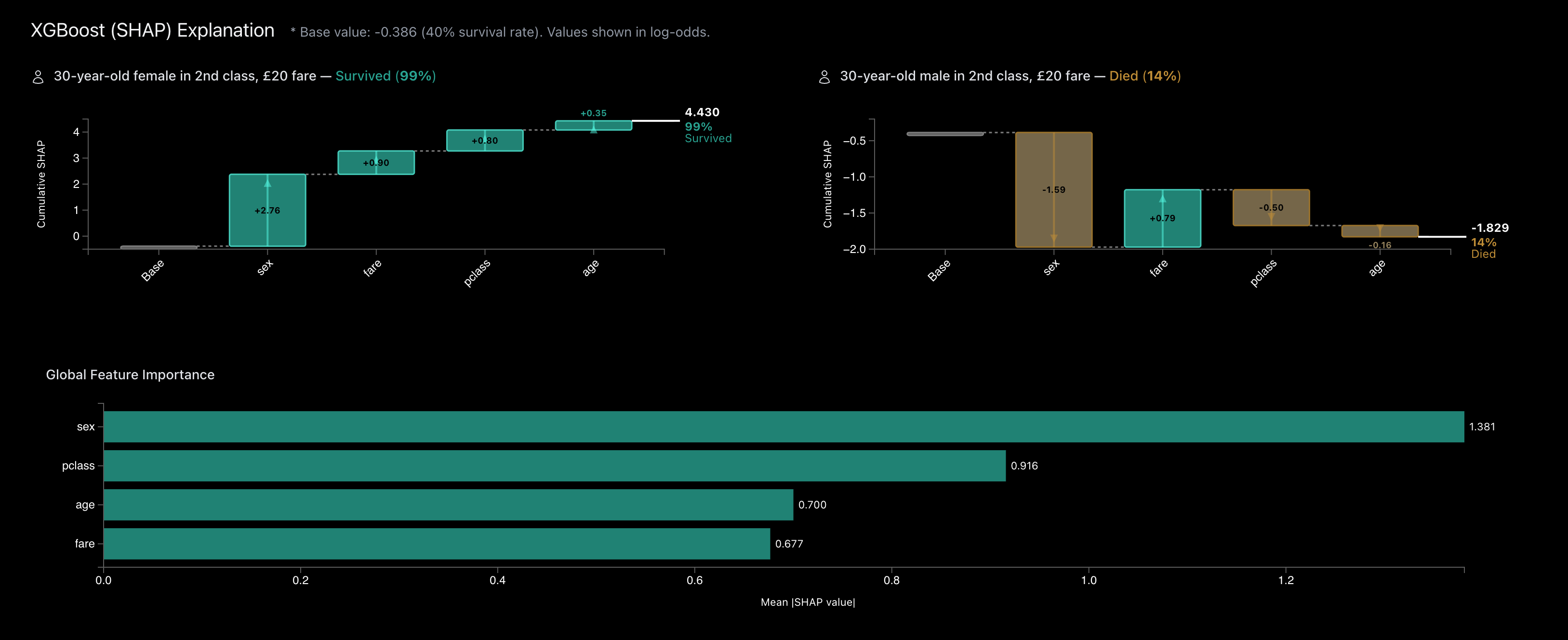
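To make "a clear path to a decision" concrete, here is a minimal Python sketch of how a tree's prediction can be reported as the rules it followed. The tree, the features `income` and `debt_ratio`, and the labels are hypothetical and hand-built for illustration. A tree trained with a library such as scikit-learn stores the same kind of feature/threshold splits, but this is not the demo's actual model or code.

```python
from dataclasses import dataclass
from typing import Union

@dataclass
class Leaf:
    label: str

@dataclass
class Node:
    feature: str
    threshold: float
    left: "Tree"   # branch taken when x[feature] <= threshold
    right: "Tree"  # branch taken when x[feature] > threshold

Tree = Union[Node, Leaf]

def predict_with_path(tree: Tree, x: dict[str, float]) -> tuple[str, list[str]]:
    """Return the predicted label plus the human-readable rules followed to reach it."""
    path: list[str] = []
    node = tree
    while isinstance(node, Node):
        value = x[node.feature]
        if value <= node.threshold:
            path.append(f"{node.feature} = {value} <= {node.threshold}")
            node = node.left
        else:
            path.append(f"{node.feature} = {value} > {node.threshold}")
            node = node.right
    return node.label, path

# Hypothetical hand-built tree (not learned from data).
tree = Node("debt_ratio", 0.4,
            left=Node("income", 30000, left=Leaf("review"), right=Leaf("approve")),
            right=Leaf("decline"))

label, path = predict_with_path(tree, {"income": 52000, "debt_ratio": 0.25})
print(label, "because", " AND ".join(path))
# approve because debt_ratio = 0.25 <= 0.4 AND income = 52000 > 30000
```

Because every prediction reduces to a short chain of comparisons like this, a single tree can be read directly; the same reading does not scale to an ensemble of hundreds of trees.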

XGBoost Model

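XGBoost is a high-performing gradient-boosting library: its models build many decision trees in sequence, each one trained to correct the errors of the trees before it, and add their scores together to make a prediction. Because this logic is spread across many trees, it is not directly human-readable, so this demo explains the model's behavior using SHAP (SHapley Additive exPlanations) values. SHAP attributes each prediction to the input features, revealing which factors influence predictions both globally and for individual cases.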
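SHAP values are based on Shapley values from game theory: a feature's attribution is its weighted average marginal contribution to the prediction over all subsets (coalitions) of the other features. The Python sketch below computes them by brute-force enumeration for a hypothetical three-feature scoring function standing in for a trained XGBoost model, filling in "absent" features from a small hypothetical background dataset. It is not the shap library's API or its TreeSHAP algorithm, which computes these quantities efficiently for tree ensembles. Global importance is summarized here as the mean absolute attribution per feature, one common choice.

```python
from itertools import combinations
from math import factorial
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]

def coalition_value(model: Model, x: Sequence[float],
                    background: list[Sequence[float]], coalition: set[int]) -> float:
    """Average model output when features in `coalition` take x's values
    and all other features take their values from each background row."""
    total = 0.0
    for row in background:
        mixed = [x[i] if i in coalition else row[i] for i in range(len(x))]
        total += model(mixed)
    return total / len(background)

def shapley_values(model: Model, x: Sequence[float],
                   background: list[Sequence[float]]) -> list[float]:
    """Exact Shapley values by enumerating every coalition (exponential in len(x))."""
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                gain = (coalition_value(model, x, background, s | {i})
                        - coalition_value(model, x, background, s))
                phi[i] += weight * gain
    return phi

def global_importance(model: Model, rows: list[Sequence[float]],
                      background: list[Sequence[float]]) -> list[float]:
    """Mean absolute Shapley value per feature across `rows`."""
    totals = [0.0] * len(rows[0])
    for x in rows:
        for i, v in enumerate(shapley_values(model, x, background)):
            totals[i] += abs(v)
    return [t / len(rows) for t in totals]

# Hypothetical stand-in for a trained XGBoost model's score (not a real model).
def toy_model(z: Sequence[float]) -> float:
    return 2.0 * z[0] - 1.0 * z[1] + 0.5 * z[0] * z[2]

background = [[0.0, 0.0, 0.0], [1.0, 1.0, 1.0], [2.0, 0.0, 1.0]]
x = [3.0, 1.0, 2.0]

local = shapley_values(toy_model, x, background)
print("local attributions:", [round(v, 3) for v in local])
print("global importance:",
      [round(v, 3) for v in global_importance(toy_model, background + [x], background)])
```

Enumeration evaluates 2^(n-1) coalition pairs per feature, each averaged over the background data, so it is only practical for a handful of features; that cost is why tree-specific algorithms such as TreeSHAP matter for models like XGBoost. A useful sanity check is additivity: the local attributions sum to the model's output for `x` minus its average output over the background.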

From Dashboards to Dialogue

Traditional analytics dashboards require users to discover insights by manually filtering, slicing, and comparing data. This works, but it places the burden of pattern-finding entirely on the user. This demo introduces a different approach: a conversational assistant layered on top of cohort analysis.

Instead of asking users to hunt for patterns, the assistant:

- Guides exploration through questions and prompts

- Helps surface meaningful cohort comparisons

- Translates visual patterns into concise explanations

- Supports "what should I look at next?" moments

To keep the focus on interaction design rather than AI capabilities, the assistant in this demo is intentionally bounded. It operates over a predefined set of cohorts and limited variations, simulating how a conversational layer could guide exploration without attempting to answer arbitrary questions. The goal is not to present a fully general chatbot, but to demonstrate how conversation can complement visual analysis in explainable AI systems.
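One way to build this kind of bounded assistant is to route only a narrow set of recognized requests to cohort comparisons over a fixed cohort list, and to answer everything else by pointing back to what it can do. The Python sketch below assumes hypothetical cohorts (`younger`, `older`, `renters`), hypothetical record fields (`age`, `owns_home`, `prediction`), and simple keyword matching in place of a language model; it illustrates the scoping idea, not the demo's actual implementation.

```python
import re
from dataclasses import dataclass
from typing import Callable, Optional

Record = dict[str, float]

@dataclass(frozen=True)
class Cohort:
    name: str
    description: str
    predicate: Callable[[Record], bool]

# Hypothetical predefined cohorts: the assistant can only discuss these.
COHORTS: dict[str, Cohort] = {
    "younger": Cohort("younger", "age under 30", lambda r: r["age"] < 30),
    "older": Cohort("older", "age 30 and over", lambda r: r["age"] >= 30),
    "renters": Cohort("renters", "does not own a home", lambda r: r["owns_home"] == 0),
}

def positive_rate(records: list[Record], cohort: Cohort) -> Optional[float]:
    """Share of cohort members the model predicted as positive (prediction == 1)."""
    members = [r for r in records if cohort.predicate(r)]
    if not members:
        return None
    return sum(r["prediction"] for r in members) / len(members)

def answer(question: str, records: list[Record]) -> str:
    words = set(re.findall(r"[a-z]+", question.lower()))
    mentioned = [c for key, c in COHORTS.items() if key in words]
    # Bounded scope: only "compare" requests naming exactly two known cohorts.
    if "compare" not in words or len(mentioned) != 2:
        return f"I can compare two of these cohorts: {', '.join(COHORTS)}."
    a, b = mentioned
    rate_a, rate_b = positive_rate(records, a), positive_rate(records, b)
    if rate_a is None or rate_b is None:
        return "One of those cohorts has no records in the current data."
    return (f"The {a.name} cohort ({a.description}) is predicted positive "
            f"{rate_a:.0%} of the time vs {rate_b:.0%} for {b.name} "
            f"({b.description}). A possible next step: compare which "
            f"features drive predictions in each cohort.")

# Hypothetical example data with model predictions already attached.
example_records = [
    {"age": 25, "owns_home": 0, "prediction": 1},
    {"age": 27, "owns_home": 1, "prediction": 0},
    {"age": 45, "owns_home": 1, "prediction": 1},
    {"age": 52, "owns_home": 0, "prediction": 1},
]
print(answer("Compare younger and older applicants", example_records))
print(answer("Why is the sky blue?", example_records))
```

Keeping the vocabulary closed means every answer is backed by a computation the dashboard can also show visually, which keeps the conversation and the charts consistent with each other.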

What This Demo Is Designed to Support

This dashboard is intended to support tasks such as:

- Validating whether model behavior aligns with expectations

- Preparing explanations for stakeholders or reviewers

- Exploring cohort-level patterns to assess consistency

- Building trust through transparency and narrative clarity

It is not intended to replace model development tools, but to complement them.

The Lens to Use While Exploring

As you explore the demo, keep this question in mind:

"Do the model's patterns make sense in this context — and can I explain them to someone else?"

That question sits at the heart of explainable AI — and at the center of this project.